A hot potato: The global AI industry is quietly crossing an energy threshold that could reshape power grids and climate commitments. New findings reveal that the electricity required to run advanced AI systems may surpass Bitcoin mining’s notorious energy appetite by late 2025, with implications that extend far beyond tech boardrooms.

The rapid expansion of generative AI has triggered a boom in data center construction and hardware production. As AI applications become more complex and are more widely adopted, the specialized hardware that powers them, accelerators from the likes of Nvidia and AMD, has proliferated at an unprecedented rate. This surge has driven a dramatic escalation in energy consumption, with AI expected to account for nearly half of all data center electricity usage by next year, up from about 20 percent today.


This transformation has been meticulously analyzed by Alex de Vries-Gao, a PhD candidate at Vrije Universiteit Amsterdam’s Institute for Environmental Studies. His research, published in the journal Joule, draws on public device specifications, analyst forecasts, and corporate disclosures to estimate the production volume and energy consumption of AI hardware.

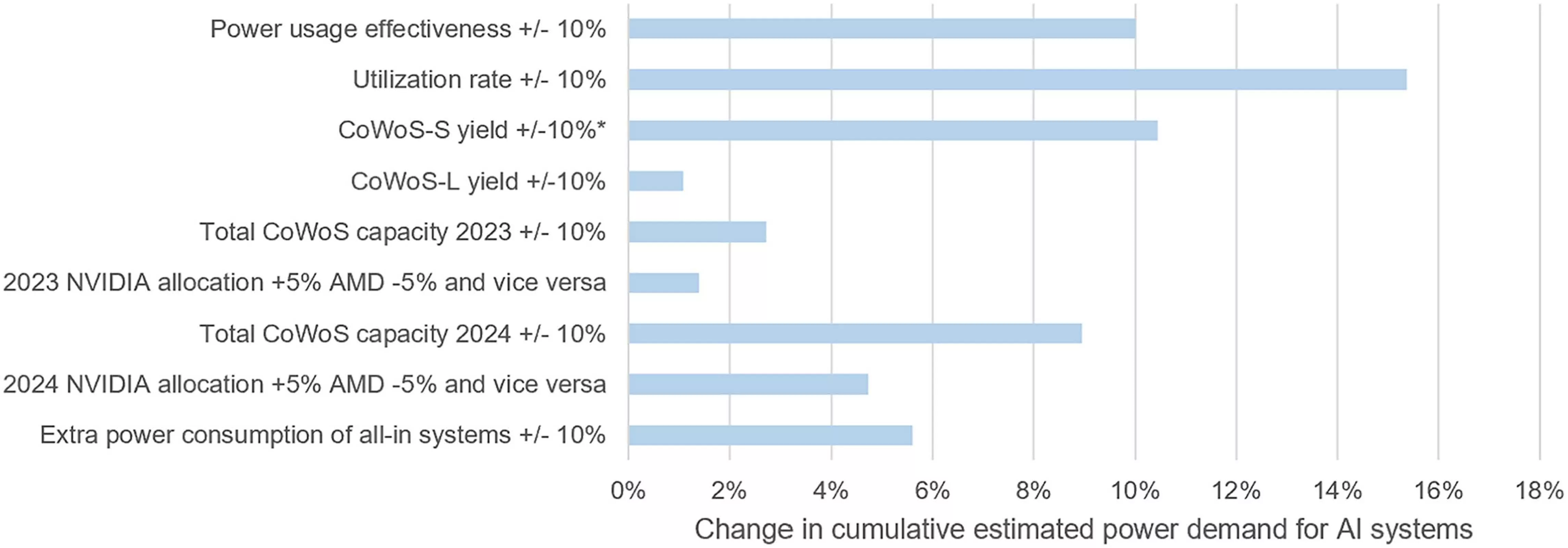

Because major tech firms rarely disclose the electricity consumption of their AI operations, de Vries-Gao used a triangulation method, examining the supply chain for advanced chips and the manufacturing capacity of key players such as TSMC.

The numbers tell a stark story. Each Nvidia H100 AI accelerator, a staple in modern data centers, is rated to draw up to 700 watts when running complex models. Multiply that by millions of units, and the cumulative energy draw becomes staggering.
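To make the "multiply by millions" step concrete, here is a back-of-the-envelope sketch. It assumes every accelerator runs at its 700 W rating around the clock, and it uses a hypothetical fleet of one million units. It ignores host CPUs, networking and cooling overhead, so it understates what a real data center would draw.

```python
# Back-of-the-envelope sketch: aggregate draw of an accelerator fleet.
# Assumptions (not from the study): each unit runs at its 700 W rating
# 24/7, and the one-million-unit fleet size is hypothetical.

WATTS_PER_H100 = 700          # rated maximum power per accelerator, watts
HOURS_PER_YEAR = 24 * 365     # 8,760 hours, ignoring leap years


def fleet_power_gw(units: int, watts_per_unit: float = WATTS_PER_H100) -> float:
    """Continuous power draw of `units` accelerators, in gigawatts."""
    return units * watts_per_unit / 1e9


def annual_energy_twh(power_gw: float) -> float:
    """Energy used over one year at a constant draw of `power_gw`, in TWh."""
    # GW x hours = GWh; divide by 1,000 to convert GWh to TWh
    return power_gw * HOURS_PER_YEAR / 1000


fleet = 1_000_000  # hypothetical fleet size
p = fleet_power_gw(fleet)
print(f"{fleet:,} units -> {p:.2f} GW -> {annual_energy_twh(p):.1f} TWh/year")
```

Under these assumptions, a million H100-class chips alone draw about 0.7 GW, or roughly 6.1 TWh over a year, before any facility overhead is counted.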

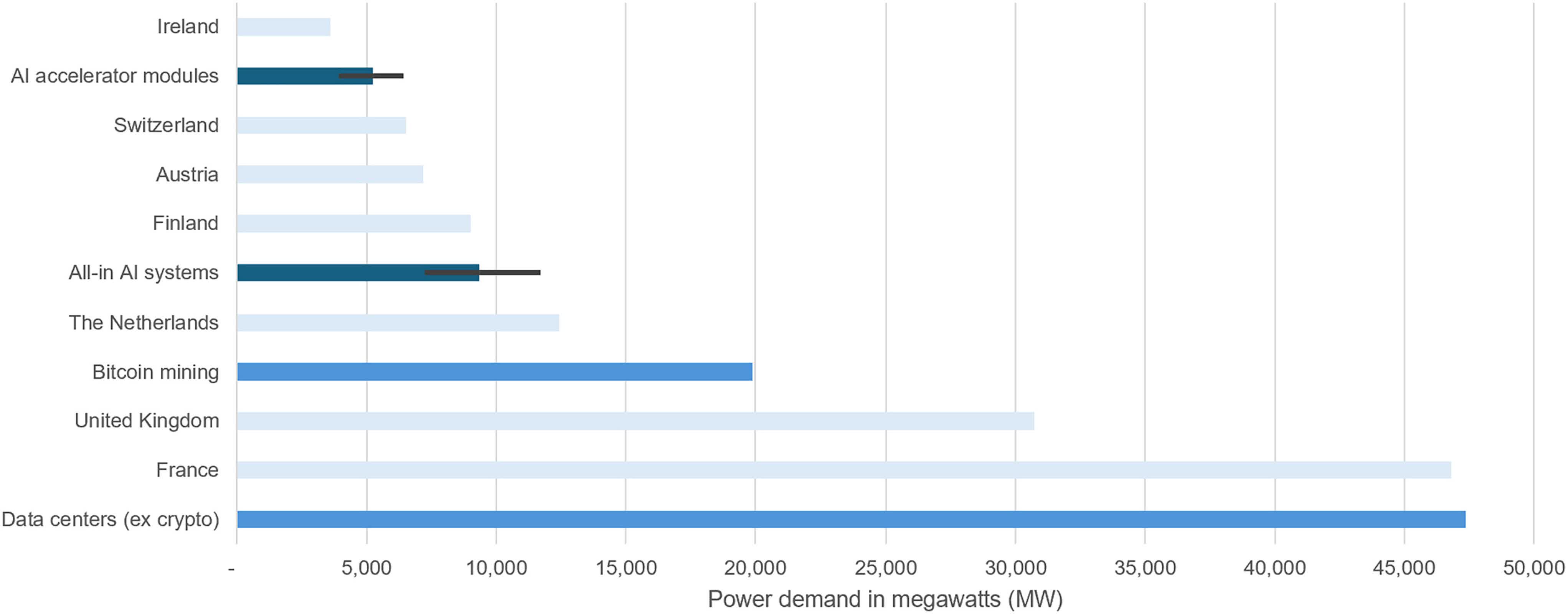

De Vries-Gao estimates that hardware produced in 2023 – 2024 alone could ultimately demand between 5.3 and 9.4 gigawatts, enough to eclipse Ireland’s entire national electricity consumption.

But the real surge lies ahead. TSMC’s CoWoS packaging technology allows powerful processors and high-speed memory to be integrated into single units, the core of modern AI systems. De Vries-Gao found that TSMC more than doubled its CoWoS production capacity between 2023 and 2024, yet demand from AI chipmakers like Nvidia and AMD still outstripped supply.

TSMC plans to double CoWoS capacity again in 2025. If current trends continue, de Vries-Gao projects that total AI system power needs could reach 23 gigawatts by the end of the year – roughly equivalent to the UK’s average national power consumption.
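The estimates above are given as continuous power (gigawatts), while national consumption is usually reported as annual energy (terawatt-hours). The sketch below converts the figures quoted in this article. It assumes the power is drawn constantly for a full year, which is a simplification the estimates themselves may not imply.

```python
# Sketch: express the continuous-power estimates quoted above as annual energy.
# Assumption: constant draw for all 8,760 hours of a year.

HOURS_PER_YEAR = 8760

estimates_gw = {
    "2023-2024 hardware, low": 5.3,
    "2023-2024 hardware, high": 9.4,
    "end-2025 projection": 23.0,
}


def gw_to_twh_per_year(gw: float) -> float:
    """Convert a constant draw in GW to energy per year in TWh."""
    # GW x hours = GWh; divide by 1,000 to get TWh
    return gw * HOURS_PER_YEAR / 1000


for label, gw in estimates_gw.items():
    print(f"{label:>26}: {gw:5.1f} GW ~ {gw_to_twh_per_year(gw):6.1f} TWh/year")
```

Sustained for a year, the three figures correspond to roughly 46, 82 and 201 TWh respectively.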

This would give AI a larger energy footprint than global Bitcoin mining. The International Energy Agency warns that this growth could single-handedly double the electricity consumption of data centers within two years.

While improvements in energy efficiency and increased reliance on renewable power have helped somewhat, these gains are being rapidly outpaced by the scale of new hardware and data center deployment. The industry’s “bigger is better” mindset – where ever-larger models are pursued to boost performance – has created a feedback loop of escalating resource use. Even as individual data centers become more efficient, overall energy use continues to rise.

Behind the scenes, a manufacturing arms race complicates any efficiency gains. Each new generation of AI chips requires increasingly sophisticated packaging. TSMC’s latest CoWoS-L technology, while essential for next-gen processors, struggles with low production yields.

Meanwhile, companies like Google report “power capacity crises” as they scramble to build data centers fast enough. Some projects are now repurposing fossil fuel infrastructure, with one securing 4.5 gigawatts of natural gas capacity specifically for AI workloads.

The environmental impact of AI depends heavily on where these power-hungry systems operate. In regions where electricity is primarily generated from fossil fuels, the associated carbon emissions can be significantly higher than in areas powered by renewables. A server farm in coal-reliant West Virginia, for example, generates nearly twice the carbon emissions of one running the same workload in renewable-rich California.
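The location effect follows from a simple identity: operational emissions $C$ equal the energy consumed $E$ times the carbon intensity $I$ of the local grid (for example, in kg of CO₂ per kWh). Assuming, for illustration, that both facilities consume the same energy, the energy term cancels and the emissions ratio reduces to the ratio of grid intensities:

$$
C = E \times I, \qquad
\frac{C_{\text{WV}}}{C_{\text{CA}}}
= \frac{E \times I_{\text{WV}}}{E \times I_{\text{CA}}}
= \frac{I_{\text{WV}}}{I_{\text{CA}}} \approx 2
$$

Here the subscripts WV and CA are illustrative labels for the West Virginia and California grids, and the factor of about 2 is the "nearly twice" cited above.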

Yet, tech giants rarely disclose where or how their AI operates – a transparency gap that threatens to undermine climate targets. This opacity makes it challenging for policymakers, researchers, and the public to fully assess the environmental implications of the AI boom.